When AI Becomes the Front Door

Redesigning Product for Generative Interfaces

TL;DR

Generative AI is becoming the entry point to many products — not just a backend tool or internal feature.

Product teams need to rethink UX, success metrics, and team structures to keep up with intent-driven experiences.

A chatbot isn’t always the right interface — the best experiences embed AI into the natural flow of work.

PMs who succeed here will focus less on novelty and more on removing friction between user intent and outcomes.

The Shift Is Already Here

Generative AI isn’t just a backend superpower anymore — it’s becoming the front door of the user experience.

Today, products like Notion AI, Perplexity, and Klarna’s shopping assistant aren’t hiding AI in a settings tab. They’re starting with it. The interaction begins with intent, not navigation. The user says what they want, and the product responds in context — sometimes better than a traditional interface ever could.

The traditional UI stack — menus, dropdowns, toolbars — is giving way to interfaces that listen, think, and act.

A turning point for us at Figure came when Klarna launched its automated support agents. Lending products often require human intervention: people have questions, documents need review, and there are moments where support feels critical to moving the process forward.

But watching Klarna’s approach inspired a new direction. We realized that many of the common questions and core workflows — especially around document verification — could be handled better by AI. That insight led us to an intensive effort to overhaul the support experience, integrating AI more deeply into both borrower interactions and backend review processes. The result? A better experience for users and relief for our operations team, which had been overwhelmed with inbound support.

This shift isn’t just about new technology. It’s a redesign of how we guide users, deliver value, and scale product operations.

When the Interface Starts Thinking for the User

Generative interfaces flip the script. Instead of designing fixed steps and flows, product teams are now building systems that interpret intent and dynamically generate the next best step — often bypassing the need for traditional UX at all.

What’s changing:

From guided workflows → to adaptive systems

From user navigation → to AI-led outcomes

From task completion → to goal fulfillment

Historically, great products handled this complexity through smart UX and a lot of design thinking. Take TurboTax, for example. I didn’t work on it directly, but at Intuit, it was well known how much work went into the filing experience: teams carefully designed every interaction, every edge case, and every possible variation in a tax situation.

But it was a massive undertaking. You needed huge teams to manually map all the scenarios and walk users through them.

Now, imagine applying an LLM to that experience. Instead of rigid flows, you could guide the user to exactly the right step based on what they’ve done before, what they’ve uploaded, or how they describe their situation — dramatically simplifying the experience while handling edge cases more flexibly.

Another example from my own experience: At Booking, I was set to lead efforts around multi-leg trips — a feature we believed had strong potential. The idea was to help users plan more complex journeys by linking destinations. But the challenge was always predicting the next best destination and sequencing the trip in a way that made sense. Without strong signals or user input, it was hard to know where to take the experience next.

With today’s AI capabilities, though, the problem looks entirely different. Now we can start with user intent: “I’m going to Tokyo and want to explore two nearby cities.” AI can take past travel behavior, preferences, proximity, and even seasonality into account to suggest an itinerary that fits. That’s a level of product capability we just didn’t have access to before, and it’s what makes generative interfaces so powerful.

Redefining Success: New Metrics for Generative UX

Once AI starts taking the wheel in your product, traditional metrics can quickly become misleading.

Time on site? Lower might be better.

Click-through rates? Less meaningful when the flow is generated on the fly.

Funnel conversion? Hard to track when steps are fluid and personalized.

Instead, the new indicators of success include:

Time to value: How quickly does the user get what they need?

Response quality: Was the AI accurate, appropriately confident, and helpful?

Trust: Do users accept the AI’s suggestions, or fall back to manual flows?

Resolution rate: Did the AI complete the task without human intervention?

As these interfaces take shape, you’ll need to rethink your analytics stack and your definition of success. Outcomes matter more than process.
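To make these indicators concrete, here is a minimal sketch of how three of them might be computed from session data. The `Session` record and its field names are hypothetical, invented for illustration; a real analytics stack will have its own schema. Response quality is left out because it usually needs human or model-based review rather than simple counting.

```python
from dataclasses import dataclass
from statistics import median
from typing import List, Optional


@dataclass
class Session:
    # Hypothetical session record; every field name here is an assumption.
    started_at: float                  # session start, in seconds
    value_reached_at: Optional[float]  # when the user got what they needed, if ever
    suggestions_shown: int             # AI suggestions presented to the user
    suggestions_accepted: int          # suggestions the user kept
    escalated_to_human: bool           # True if a person had to step in


def time_to_value(sessions: List[Session]) -> Optional[float]:
    """Median seconds from session start to the first useful outcome."""
    durations = [
        s.value_reached_at - s.started_at
        for s in sessions
        if s.value_reached_at is not None
    ]
    return median(durations) if durations else None


def acceptance_rate(sessions: List[Session]) -> Optional[float]:
    """Share of AI suggestions that users accepted: a rough proxy for trust."""
    shown = sum(s.suggestions_shown for s in sessions)
    accepted = sum(s.suggestions_accepted for s in sessions)
    return accepted / shown if shown else None


def resolution_rate(sessions: List[Session]) -> Optional[float]:
    """Share of sessions that reached an outcome without human intervention."""
    if not sessions:
        return None
    resolved = sum(
        1
        for s in sessions
        if s.value_reached_at is not None and not s.escalated_to_human
    )
    return resolved / len(sessions)


sessions = [
    Session(0.0, 40.0, 4, 3, False),
    Session(0.0, 90.0, 2, 1, True),
    Session(0.0, None, 2, 0, True),
    Session(0.0, 60.0, 2, 2, False),
]

print(time_to_value(sessions))    # 60.0
print(acceptance_rate(sessions))  # 0.6
print(resolution_rate(sessions))  # 0.5
```

Note that none of these functions count clicks or steps. They only ask whether the user got to an outcome, how fast, and how much help it took.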

Your Team Needs to Build Differently

You can’t just slap a chatbot onto your product and call it a day. These experiences require cross-functional, AI-native teams.

To build well, you need:

PMs who understand model behavior, prompting, and fallback UX

Designers who think in conversation flows and trust states

Prompt engineers to shape LLM responses and behavior

Model ops / ML leads to manage fine-tuning, latency, and performance

This isn’t a feature team bolted to your existing product squad. It’s a core product function that spans user experience, system design, and intelligence.

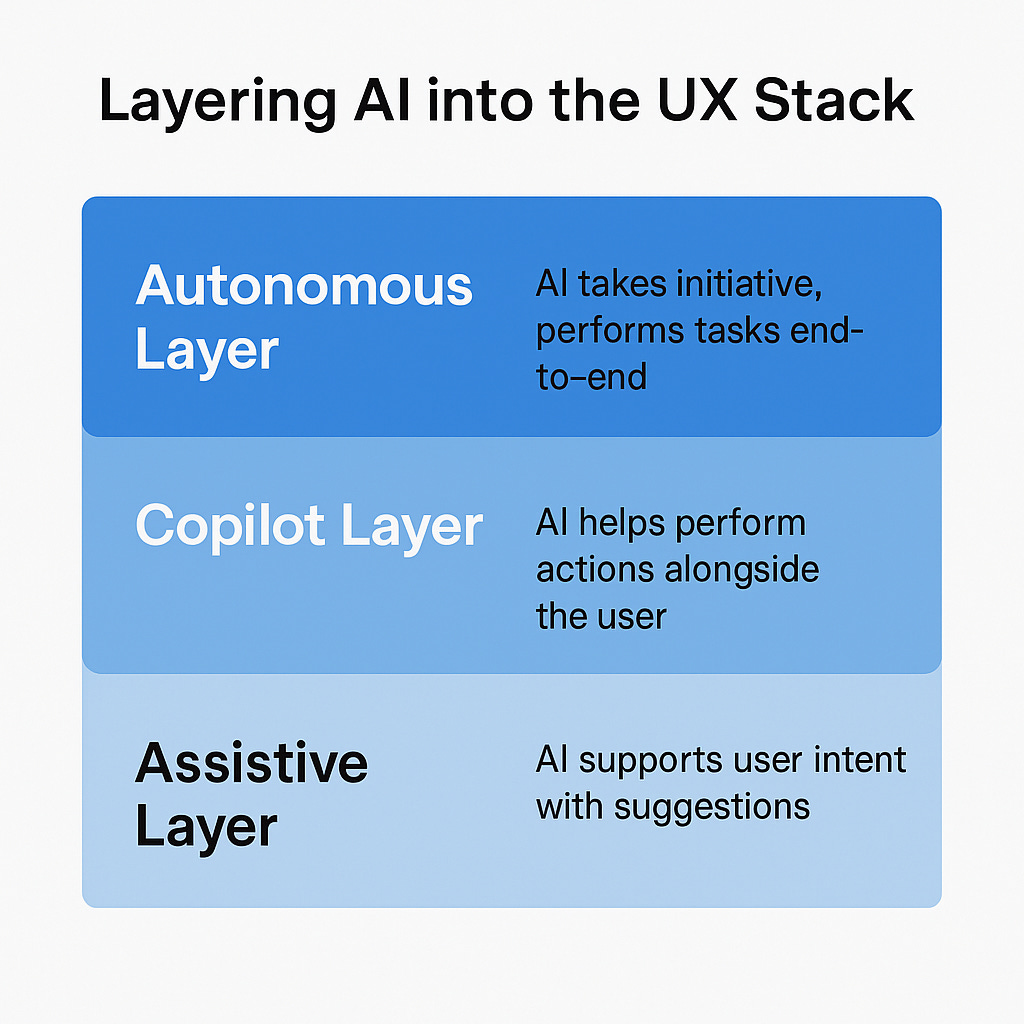

A Framework: Layering AI into the UX Stack

Not every product needs a full conversational interface. Here’s a simple way to think about integrating AI progressively:

1. Assistive Layer

AI provides lightweight support — autocomplete, suggestions, smart defaults.

E.g., Gmail’s Smart Compose or Notion’s writing helpers.

2. Copilot Layer

AI helps the user complete tasks — recommending steps or creating draft content.

E.g., GitHub Copilot writing code as you work.

3. Autonomous Layer

AI handles tasks end-to-end with minimal input.

E.g., AI that generates travel itineraries or books a complete trip based on a few cues.

Most products should start in the assistive or copilot layers, gradually moving toward autonomy as confidence and data improve.
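One way to picture that gradual move is a simple gate that picks a layer based on how confident the system is in its answer. This is a sketch under stated assumptions, not a recommended implementation: the `choose_layer` function, the confidence score, and both thresholds are hypothetical, and real values would come from your own evaluation data.

```python
from enum import Enum


class Layer(Enum):
    ASSISTIVE = "assistive"    # suggest, autocomplete, set defaults
    COPILOT = "copilot"        # draft the work, user reviews and confirms
    AUTONOMOUS = "autonomous"  # complete the task end-to-end


def choose_layer(
    confidence: float,
    copilot_min: float = 0.6,     # assumed threshold, tune against real data
    autonomous_min: float = 0.9,  # assumed threshold, tune against real data
) -> Layer:
    """Pick how much the AI does on its own for a given confidence score."""
    if confidence >= autonomous_min:
        return Layer.AUTONOMOUS
    if confidence >= copilot_min:
        return Layer.COPILOT
    return Layer.ASSISTIVE


print(choose_layer(0.95).value)  # autonomous
print(choose_layer(0.70).value)  # copilot
print(choose_layer(0.30).value)  # assistive
```

Starting with a high `autonomous_min` and lowering it as evidence accumulates mirrors the progression described above: the product earns autonomy rather than assuming it.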

Not Every Product Needs a Chatbot — Pick the Right Interaction

Here’s a mistake I’m seeing across the board:

Teams assume LLMs = chatbots.

But chat is just one interaction pattern — and often not the best one.

Take Miro, for example.

Instead of giving users a chatbot to ask for help, Miro lets you work directly on the canvas. You can highlight a set of sticky notes and ask AI to summarize them. You can select a section of your diagram and generate ideas from that context.

It’s fluid, embedded, and exactly where the user’s attention already is.

No need to context-switch to a new interface

No long prompt required

No guessing what to type into a bot

This kind of embedded, workflow-native AI is what most products should aspire to.

The question isn’t “How do we add a chatbot?”

It’s “How do we help users do less work and get better outcomes?”

Sometimes that means chat. But often, a well-placed AI action or intelligent nudge is far more effective.

Final Thought: Don’t Just Add AI — Rethink the Experience

This moment in product development isn’t just about smarter backends or faster content generation. It’s about rethinking the entire contract between user and product.

Where once we designed screens and flows, now we design systems that respond to intent.

Where we once tracked clicks and completions, now we track trust and outcomes.

The question isn’t whether to use AI. It’s how to use it to remove friction, guide users, and evolve faster than ever.

PMs who embrace this shift, and stay clear-eyed about when chat helps and when it gets in the way, will build experiences that feel modern, magical, and human.

✅ Key Takeaways

Generative AI is now part of the user experience, not just a backend tool.

Not every product needs a chatbot — embedded, contextual AI often works better.

Rethink metrics: focus on time to value, trust, and outcomes over clicks or time on site.

Your team structure must evolve, with PMs, designers, and ML engineers working in tight loops.

This space will move fast — optimize for adaptability, not just shipping something “AI-powered.”